Plug the value leak: Fix your drilling data

Why do drilling automation systems, real-time operating systems, and drilling analysis and design systems fail so often, or deliver less value than expected, when analogies from other industries show that these approaches have significant, clear and present value? The first and foremost answer is surprisingly simple—all drilling systems rely on data that are demonstrably bad. If we could supply our tools with the necessary and sufficient quality data, our controls, analytics and engineering would be much more trustworthy, both in the office and in the field.

With the combination of harsh environments, complex well geometries and shrinking economic margins (especially in the U.S. land market), a growing importance is now being placed on the accuracy of data delivered from pressure, pit level, hookload, torque, flowrate, and other equally indispensable, yet largely antiquated, rig sensors. Another equally important, but perhaps not as well understood opportunity is the “Big Data” movement taking root in the industry. As we attempt to apply new, complex analytical methods, we realize that data quality is now much more important. Whereas most conclusions could, previously, be reached stochastically, or through less sophisticated methods, complex drilling analytics must be physically consistent. Thus, it is important to cleanse the data a priori, and in real time, when possible and practical.

Many E&P companies have begun to take a critical look at this aspect of their operation; however, they have focused largely on sensor capability and new sensor technology. What is largely missing is a method to detect sensor faults and failures in real time, and the ability to estimate the correct value of a measurement when an error occurs in their existing sensor networks. This article discusses an oil field-specific application of a proven analytical technology, used in ultra-critical U.S. Office of Naval Research systems.

The collaborative initiative takes the form of the next-generation Sentinel Real-Time (RT) system, which leverages a Bayesian probabilistic network model. This provides operators with an unobtrusive methodology that high-grades real-time data streams, and identifies existing and impending sensor and/or process faults. Thus, the elevated confidence in the fidelity of real-time data sets facilitates sound decisions that foster safer, more cost-effective well delivery, with reduced instances of non-productive time (NPT) and invisible lost time (ILT). The latter two items, together, have been shown to account for up to 50% of total drilling time.

Specifically, the powerful data analytics engine links all surface and subsurface sensors into a holistic, self-auditing network, wherein each sensor is checked against the others to determine whether any of them is malfunctioning, Fig. 1. Once a defect is exposed, the inferior data can be replaced, before they are fed into the real-time event detection and data analytics software, with properly calibrated, predictive values based on the model that links all the sensors together.

Along with the obvious, and very important, safety and environmental safeguards (think pressure, flow and pit volume sensors used for maintaining well control), the capacity to isolate a sensor fault makes the system ideally suited for condition-based monitoring and maintenance. By providing uninterrupted oversight of rig activities, be it for the rig in its entirety or individual components, the technology provides early alerts for equipment deficiencies, allowing ample time for intervention before the failure of a key component and the resulting NPT.

Moreover, due to its intrinsic redundancy and, consequently, the higher confidence in data integrity, the patent-pending system is well-positioned as an enabling technology for drilling automation. Functioning as a virtual guard dog, the technology prevents the potentially catastrophic inclusion of inferior data in critical control algorithms.

BAD DATA EPIDEMIC

The industry’s struggle with data volumes that have reached epic proportions has become the subject du jour.1 However, comparatively less focus has been placed on validating the quality of the data sets being mined. When it comes to well construction, which continues to rely mostly on rig sensors introduced decades ago, the time-worn truism “garbage-in, garbage-out” is particularly relevant, leaving operators little choice but to cross their fingers and hope for the best.

Unfortunately, relying on best-guess scenarios can lead to real-time decisions that are based on bad data, thereby amplifying the risks of disastrous safety and economic implications. Further, the importance of high-integrity data has become even more magnified by the increasing number of centralized operating centers established to remotely monitor offshore and, more recently, onshore drilling operations.

The recent acceleration in adoption of advanced surface and downhole sensors was intended to enhance safety and reduce NPT, but along with higher sampling rates that make human real-time monitoring enormously difficult, a contagion of substandard data continues to stream unabated through rig analytical software. Recent studies by Chesapeake Energy suggest that upwards of 50% of the rig sensors in service are delivering inaccurate data, with sensor reading variances of up to 50%, high or low, compared to a correct, calibrated reading.

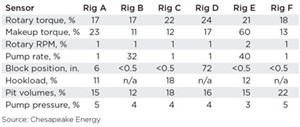

As a case in point, the inherent value of a technology capable of instantly recognizing improperly calibrated data, and replacing them with the correct figures, was reinforced by an independent operator’s collection of data from eight primary surface sensors, on six rigs, in its onshore drilling fleet. The operator found average errors, from bad calibration, of up to 60% in the case of makeup torque, with only the RPM sensors shown to be consistently within the proper calibration specifications, Table 1.

MODEL CONSTRUCT

The concept of developing a seamless methodology, with intrinsic relational redundancies to validate drilling sensor data, was germinated within the Drilling and Rig Automation Group of the University of Texas at Austin’s (UT Austin) Department of Petroleum and Geosystems Engineering.2 The original version of the holistic data validation technology was developed and matured through funding by the U.S. Office of Naval Research, which sought technology to verify the accuracy of sensor data in critical electro-mechanical actuator systems, in submarines and ships.3 With UT researchers exploring technology transfer opportunities in the civil, mechanical, and aerospace engineering disciplines, it was recognized quickly that the validation software used effectively in the defense sector had direct implications for improving the safety and economic performance of oil and gas drilling operations.

What resulted was a behind-the-scenes model that integrates all rig sensor data with built-in variables capable of instantly identifying sensor and/or process faults, as well as malfunctioning equipment. Since all the sensors are joined in an integrated network, the cross-checking mechanism ensures all are in proper working order. Specifically, the now-transferred technology is built around a Bayesian network model of sensed parameters to facilitate easier and complete enumeration of all the relational redundancies inherent in a particular system.

The data are ingested through the commonly used WITS, Modbus, and WITSML transmission standards. All the information collected is closely related, and the built-in redundancies detect any anomalies, whether in an individual sensor or in the top drive, mud pump, mud motor or other rig component. Because all of the sensors are logically linked together in a seamless network, measurements that behave as if they were independent, or unrelated, suggest that one or more of the sensors are not relaying the proper data, or that something is amiss in the process.
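To give a feel for how a redundancy check can feed a probabilistic belief, the sketch below applies Bayes' rule to a single sensor. It is not the Sentinel RT model: the function name, the prior, and the two likelihoods are illustrative assumptions, and a full Bayesian network would combine many such relations at once.

```python
def update_fault_belief(prior_fault, disagrees,
                        p_disagree_if_faulty=0.9,
                        p_disagree_if_healthy=0.05):
    """Posterior probability that a sensor is faulty after one redundancy check.

    prior_fault: belief that the sensor is faulty before the check (0..1).
    disagrees:   True if the sensor disagreed with a related measurement.
    The two likelihoods are assumed values, chosen only for illustration.
    """
    if disagrees:
        like_faulty, like_healthy = p_disagree_if_faulty, p_disagree_if_healthy
    else:
        like_faulty = 1.0 - p_disagree_if_faulty
        like_healthy = 1.0 - p_disagree_if_healthy
    evidence = like_faulty * prior_fault + like_healthy * (1.0 - prior_fault)
    return like_faulty * prior_fault / evidence


# Start from a small prior and apply three consecutive disagreements.
belief = 0.02
for disagrees in [True, True, True]:
    belief = update_fault_belief(belief, disagrees)
```

With these assumed numbers, a single disagreement raises the belief only moderately, while repeated disagreements push it close to certainty. That behavior is why a network of redundant relations is less prone to false alarms than a single threshold.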

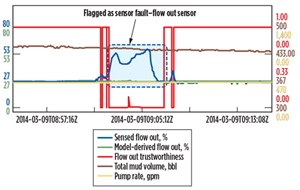

For instance, if mud is flowing in at a certain rate, it also should flow out at the same rate (assuming no kick or lost circulation scenario), with any deviation from that norm likely indicating a faulty flow out sensor, Fig. 2. Once the fault is identified, the Bayesian network derives a very close approximation of what the actual flowrate should be. In that same way, the network model would pick up process faults, such as kicks, lost circulation and other drilling dysfunctions, and would be able to alert the user accordingly.
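The flow-in/flow-out relation can be sketched as a simple consistency check. This is a minimal illustration under stated assumptions, not the vendor's algorithm: the function names and the 10% tolerance are hypothetical, steady-state circulation is assumed, and substituting the flow-in reading is only a crude stand-in for the estimate a Bayesian network would derive.

```python
def check_flow_balance(flow_in_gpm, flow_out_gpm, tolerance=0.10):
    """Return (is_consistent, relative_deviation) for a flow-in/flow-out pair.

    tolerance is an assumed allowable relative mismatch (10% here).
    """
    if flow_in_gpm <= 0:
        raise ValueError("flow_in_gpm must be positive")
    deviation = (flow_out_gpm - flow_in_gpm) / flow_in_gpm
    return abs(deviation) <= tolerance, deviation


def validated_flow_out(flow_in_gpm, flow_out_gpm, tolerance=0.10):
    """Pass the flow-out reading through if consistent, else substitute flow-in.

    Assumes a kick or lost circulation has already been ruled out by other
    measurements (for example, pit volume), so the mismatch is a sensor fault.
    """
    consistent, _ = check_flow_balance(flow_in_gpm, flow_out_gpm, tolerance)
    return flow_out_gpm if consistent else flow_in_gpm


consistent, deviation = check_flow_balance(600.0, 420.0)
cleaned = validated_flow_out(600.0, 420.0)
```

A mismatch on its own cannot separate a bad sensor from a real influx or loss. That distinction depends on the other linked measurements, which is the role the network model plays.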

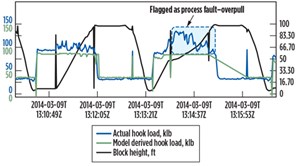

Consider, for instance, the case of a process fault, such as an unexpected tensile load, as indicated by the hookload sensor, which degrades the process confidence level to zero. In this particular case, the software would raise an alarm of a potential overpull condition, representative of a pipe sticking event, rather than a defective sensor, Fig. 3.

Most importantly, with the steadily increasing development of control algorithms to advance automated drilling, tripping, and managed pressure drilling (MPD), which key off the assimilated data, the value of knowing whether sensed data can be trusted cannot be overstated.4 Here, trustworthiness carries enormous weight, considering the repercussions should automated decisions be based on inferior data included in the control algorithm. As such, the new validation software continually re-processes the data and checks whether they are trustworthy, or should be discarded, before the data can be fed into an automated closed-loop control routine.

Furthermore, the system’s modularity makes it extremely flexible in accommodating the wide variances in number and type of sensors used from rig to rig. The model architecture is easily scalable to meet individual requirements and objectives, whether for use on only the top drive, fluid circulation or other subsystems, or as a single network that encompasses all of the rig’s surface and subsurface sensors.

While the robust system enhances the value derived from simple surface sensors without the addition of sophisticated downhole telemetry data, it is easily updated to include logging-while-drilling (LWD), measurement-while-drilling (MWD) and other downhole measurement tools.

RIG NPT MINIMIZATION

Breakdowns on the rig involving key pieces of equipment not only can jeopardize well integrity, but also, as Fereday5 and others have noted, represent one of the primary causes of NPT. Typically, operators and contractors are at the mercy of planned maintenance schedules that are based on the equipment’s specified operational life expectancy, workloads, and targeted operating environment. However, failure risks are compounded appreciably, when maintenance is performed in accordance with parameters that may be inaccurate or unrealistic, given ever-changing drilling conditions, unforeseen events or revised well objectives.

By contrast, continual oversight of sensor output throughout the actual drilling process delivers a proactive, condition-based response that goes beyond data quality by flagging rig or equipment deficiencies well before catastrophic failure occurs. Furthermore, the advanced algorithms written into the validation model consider all the available data generated by the system to estimate how much useful life remains in a particular piece of equipment. Ultimately, these early warnings help eliminate failure-related NPT, or at least minimize the duration of downtime for equipment repair or replacement.

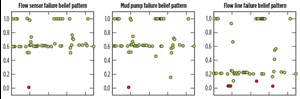

Since the faults identified in different rig components also generate different belief patterns, the holistic unification of all the sensors enables immediate isolation of the problem area, which would be very difficult, if not impossible, if only a single sensor were considered, Fig. 4. Again, in the case of a flow line sensor, the network would determine, in real time, whether a fault was in the sensor, the mud pump or the flow line (i.e., leakage). Consider also that if the calculated real-time efficiency of the mud pump were shown to be deviating from its nominal value, the identified efficiency decline would strongly suggest deterioration, giving personnel plenty of lead time to make fact-based decisions on when to take the mud pump off-line for repair or replacement.
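The mud pump example can be made concrete with a rough volumetric efficiency calculation. The sketch assumes a single-acting triplex pump and a trusted flow-in measurement. The function names, the 95% nominal efficiency, and the 5-point alert margin are illustrative assumptions, not values taken from the system described here.

```python
import math

CUBIC_INCHES_PER_GALLON = 231.0  # exact definition of the U.S. gallon


def triplex_theoretical_gpm(liner_in, stroke_in, spm):
    """Theoretical output (gal/min) of a single-acting triplex pump at 100% efficiency."""
    if liner_in <= 0 or stroke_in <= 0 or spm <= 0:
        raise ValueError("liner, stroke and stroke rate must be positive")
    # Three cylinders, each displacing (pi/4) * D^2 * L cubic inches per stroke.
    gal_per_stroke = 3 * (math.pi / 4) * liner_in ** 2 * stroke_in / CUBIC_INCHES_PER_GALLON
    return gal_per_stroke * spm


def volumetric_efficiency(measured_gpm, liner_in, stroke_in, spm):
    """Ratio of measured flow to theoretical pump output."""
    return measured_gpm / triplex_theoretical_gpm(liner_in, stroke_in, spm)


def efficiency_alert(efficiency, nominal=0.95, max_drop=0.05):
    """True when efficiency has fallen more than max_drop below nominal (assumed limits)."""
    return (nominal - efficiency) > max_drop


# Hypothetical pump: 6-in. liner, 12-in. stroke, 100 strokes/min.
eff = volumetric_efficiency(390.0, liner_in=6.0, stroke_in=12.0, spm=100.0)
needs_attention = efficiency_alert(eff)
```

In practice, the efficiency would be tracked over time rather than judged from one sample, so that a gradual decline can be separated from measurement noise.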

DRILLING EFFICIENCY AND OPTIMIZATION

More advanced sensor arrays, employed nowadays in the more critical drilling applications, deliver multiple sensor sampling rates that are extremely difficult, if not impossible, to humanly digest for real-time decisions. Therefore, at a time when low commodity prices make operators especially keen on any improvement that can help maximize asset value, opportunities to optimize drilling efficiency can be overlooked. Until now, real-time decisions to improve efficiency fell mostly on the most experienced driller and the individual intimately familiar with a particular rig and drilling theater.

Hand in hand with condition-based monitoring, the data-validation algorithms pick up deviations that can directly impair optimal efficiency, be it with the mud motor, bit and other BHA components, or else with a drilling (process) dysfunction, such as a sensed increase in axial, lateral or torsional vibrations. By tracking and analyzing RPM, torque, weight-on-bit, differential pressure, and rate of penetration data, the holistic analytics engine can isolate, for example, if the mud motor is defective, or if there is a process fault, such as a dull bit. Through continual updating of industry-standard, Mechanical Specific Energy (MSE) calculations used for performance evaluations, the software provides objective assessments for real-time efficiency improvements.
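For reference, an MSE calculation can be sketched as follows. The article does not state which MSE variant the software uses. This example assumes Teale's classic formulation in oilfield units, and the function name and input values are hypothetical.

```python
import math


def mechanical_specific_energy(wob_lbf, rpm, torque_ftlbf, rop_ft_per_hr, bit_diameter_in):
    """Teale's MSE in psi.

    MSE = WOB / A + (120 * pi * N * T) / (A * ROP)
    with WOB in lbf, A in in^2, N in rev/min, T in ft-lbf and ROP in ft/hr.
    """
    if rop_ft_per_hr <= 0:
        raise ValueError("ROP must be positive to compute MSE")
    bit_area_in2 = math.pi * bit_diameter_in ** 2 / 4
    thrust_term = wob_lbf / bit_area_in2
    rotary_term = 120 * math.pi * rpm * torque_ftlbf / (bit_area_in2 * rop_ft_per_hr)
    return thrust_term + rotary_term


# Hypothetical operating point for an 8.75-in. bit.
mse_fast = mechanical_specific_energy(25_000, 120, 8_000, 100, 8.75)
# Same inputs with half the ROP: more energy is spent per volume of rock drilled.
mse_slow = mechanical_specific_energy(25_000, 120, 8_000, 50, 8.75)
```

Because every input comes from a rig sensor, an MSE trend is only as reliable as the torque, RPM, weight-on-bit and ROP data behind it, which is why validation ahead of the calculation matters.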

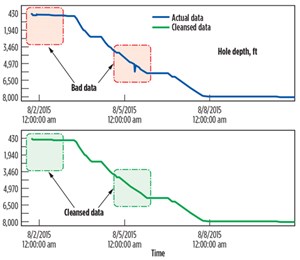

Along with optimizing real-time drilling efficiency, the software also provides beneficial insight to aid in field development. Generating reports for individual wells, the analytical software processes historical drilling data, which are improved further by identifying, engaging, terminating, and ultimately, replacing faulty data with cleansed values derived from the model, Fig. 5. Hence, operators can use the final report to optimize post-drilling performance analysis, amplify data quality for analytical purposes, and enhance future well planning. Specifically, such reports also provide indication of sensors that need to be scheduled for re-calibration, or even require replacement.

Currently, the software is in play analyzing operator data sets, cataloging bad data, and furthering the business case for improving data quality for increased drilling efficiency and safety.

REFERENCES

1. Eaton, C., “Oil patch works to harness a gusher of ‘Big Data,’” Houston Chronicle, May 10, 2015.

2. Ambrus, A., P. Ashok, and E. van Oort, “Drilling rig sensor data validation in the presence of real-time process variations,” SPE paper 166387-MS, presented at the SPE Annual Technical Conference and Exhibition, New Orleans, La., Sept. 30-Oct. 2, 2013.

3. Krishnamoorthy, G., P. Ashok, and D. Tesar, “Simultaneous sensor and process fault detection and isolation in multiple-input-multiple-output systems,” IEEE Systems Journal, vol. 9, no. 2, pp. 335-349, June 2015.

4. Ambrus, A., P. Pournazari, P. Ashok, R. Shor, and E. van Oort, “Overcoming barriers to adoption of drilling automation: Moving towards automated well manufacturing,” SPE-IADC paper 173164-MS, presented at the SPE/IADC Drilling Conference and Exhibition, London, UK, March 17-19, 2015.

5. Fereday, K., BP, “Downhole drilling problems: Drilling mysteries revealed!,” presented at American Association of Professional Landmen (AAPL) Annual Meeting, San Francisco, June 13-16, 2012.
