Information Technology

New capability in personal and high-performance computing provides choices

E&P professionals welcome business and science gains delivered through mainstream high-performance computing within departments, workgroups and at the deskside.

Mark M. Ades and Marisé Mikulis, Microsoft, Houston

One thing that many technical professionals dread is standardization. They don't want to be constrained. It limits creativity. They see it as the enemy of competition. And yet, what is the geoscientist's and the business manager's most common gripe? "I have too much software that's not standardized. I'm spending too much time and money on middleware to get different applications and platforms to function smoothly." It's not strictly an either/or situation. Individuals have individual needs. But the business case demands solutions that integrate as easily and reliably as possible across business and science applications. Thus, standardization must play a key role.

Less than a decade ago, parallel computing on clusters of small, yet powerful computers changed the computing landscape. This allowed large computational problems to be divided among a number of linked computers and solved somewhere other than a massive, central computing facility. Recently, High-Performance Computing (HPC) clusters reached the department and even workgroup levels in some instances; so, E&P software providers have been rewriting code to fit the parallel processing environment, where practical.
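As a minimal sketch of that divide-and-combine idea, the Python below splits a list of samples into chunks, hands each chunk to a worker and sums the partial results. The function names and the sum-of-squares workload are illustrative assumptions, and local threads stand in for the linked computers; a production cluster would rely on a job scheduler or a message-passing library instead.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, n_chunks):
    """Split a list into n_chunks contiguous pieces of near-equal size."""
    size, extra = divmod(len(items), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks take one additional item each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def chunk_energy(chunk):
    """Hypothetical per-worker task: sum of squared samples in one chunk."""
    return sum(x * x for x in chunk)


def parallel_energy(samples, n_workers=4):
    """Scatter chunks to workers, then gather and combine the partial sums."""
    chunks = split_into_chunks(samples, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(chunk_energy, chunks))
    return sum(partial_sums)
```

The pattern only pays off when each chunk can be processed independently, which is why software providers rewrite code for clusters "where practical" rather than everywhere.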

Given E&P business drivers and operating challenges, this new computing capability will lead to increased oil and gas production and improved return on investment.

THE SCIENTIFIC PERSPECTIVE

After years of cost-cutting, operating companies find themselves lean on professional staff and carefully choosing expenditures on technology or outside services.

For a long time, geoscience and engineering capabilities have outpaced affordable technologies. Given the resources, geoscientists have known how to solve many E&P challenges, but the supporting technologies required have simply been too expensive to distribute widely. "Good enough" solutions have had to suffice in many cases.

Reservoir engineering is a case in point. The supercomputers of the 1960s and '70s were the realm of massive simulations undertaken to estimate petroleum reserves and unravel complex subsurface producing regimes, but only projects with the very highest potential return could afford such thorough analyses.

In recent years, improved and relatively more affordable computing technologies have allowed fine-grain reservoir engineering solutions to be applied more routinely, but the problems to be solved have grown in complexity as well. Activities such as dynamic, time-based fluid modeling and closed-loop analytical processes are absorbing increased computing capacity and performance as fast as it is made available. Petroleum geophysicists, of course, also had to compromise and, in some sense, they always will. There will always be problems that require ever more powerful computers, always more questions than answers. But excellent progress in computer capability has been made.

Each E&P discipline is accustomed to specialized operating systems, interfaces and software applications built especially for its unique datasets. Recently, more thought has been given to an optimal business solution that would allow all E&P professionals to conduct technical computing, including HPC, routinely on the same operating system as their e-mail, presentations, reporting, dashboards and the like.

The clusters introduced since 1995, while more favorably priced, are still not truly optimal for our work. These UNIX- and Linux-based systems, no matter how "open" they might be, still require a great deal of time and expertise to operate and extend. This complexity requires users to learn and support additional operating system platforms and introduces challenges for things like authentication, security and interoperability.

SOLUTIONS

"The E&P market is ripe for truly personal HPC or personal supercomputing," comments Mike Kenney, senior geoscientist with mid-sized Coldren Oil and Gas Company LP. "The volumes of seismic data being analyzed by geoscientists have grown tremendously. Multiple 3D volumes are used routinely for structural, stratigraphic and pore-fluid detection. Most large- and mid-cap majors and independents have previously used in-house Linux clusters to apply certain seismic processing methods to their 3D seismic surveys, but they also outsource to evaluate 'best of class' algorithms, too. In today's geophysical marketplace, you have to get in line for processing services and, naturally, processing service costs are rising. Availability and turnaround times are lengthening as well."

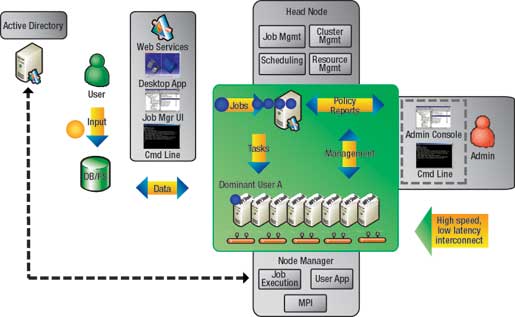

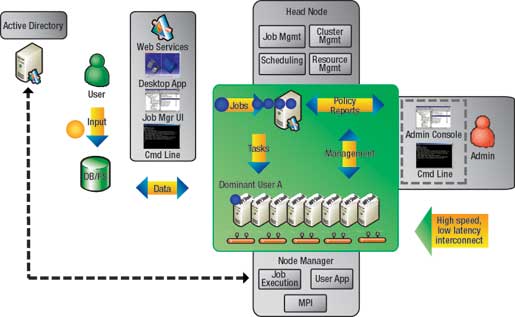

Kenney continues, "Now, there is no 'silver bullet' in oil and gas exploration. Oil and gas companies constantly strive for technological advantages. HPC clusters and parallelized software have become a new option to perform computationally intensive processing tasks (Fig. 1). Instead of outsourcing, processing tasks are performed right in the geoscientist's office and on his/her time schedule. This creates a competitive advantage and is one factor that helps reduce cycle time, so drilling can start sooner."


Fig. 1. Windows Compute Cluster Server 2003 architecture. A Head Node controls and mediates access to cluster resources and manages, deploys and schedules jobs to processors (compute nodes) within a cluster (supporting Perl, FORTRAN, C/C++, C#, and Java). The existing corporate infrastructure and Active Directory are used for security, account and operations management.


Kenney explains that geoscientists are becoming smarter at reducing drilling risk with seismic. As an example, routine extraction of time-frequency spectral amplitudes from full-stack, broadband seismic volumes produces a large number of 3-D volumes that, when interpreted in combination with other 3-D data types, can reveal geologic details obscured in the full-stack data sets. These transforms can be computationally intensive and, depending upon the type used, are often outsourced. "With the HPC cluster option," says Kenney, "we can perform these processing tasks with a faster turnaround and control schedule, too. We're all working as fast as we can. We've never experienced a market quite like this."
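To give a feel for why such transforms are compute-hungry, here is a rough sketch of one common way to extract a time-frequency spectral amplitude: a short-time Fourier transform evaluated at a single frequency along one trace. This is an assumed, simplified stand-in and not necessarily the transform Kenney's team uses; the function name, the Hann taper and the parameters are illustrative choices.

```python
import cmath
import math


def spectral_amplitude(trace, dt, freq_hz, window_len):
    """Amplitude at freq_hz inside a sliding Hann-tapered window.

    trace      : list of samples from one seismic trace
    dt         : sample interval in seconds
    freq_hz    : analysis frequency in hertz
    window_len : window length in samples
    Returns one amplitude per window position.
    """
    # Hann taper limits spectral leakage from the window edges.
    hann = [0.5 - 0.5 * math.cos(2 * math.pi * k / (window_len - 1))
            for k in range(window_len)]
    # Tapered complex exponential at the analysis frequency.
    kernel = [w * cmath.exp(-2j * math.pi * freq_hz * k * dt)
              for k, w in enumerate(hann)]
    norm = sum(hann)
    amplitudes = []
    for start in range(len(trace) - window_len + 1):
        acc = sum(trace[start + k] * kernel[k] for k in range(window_len))
        # Factor 2 restores the amplitude of a real-valued sinusoid.
        amplitudes.append(2 * abs(acc) / norm)
    return amplitudes
```

Repeating this for every trace in a survey and for every frequency of interest yields one output volume per frequency. Because each trace is processed independently, the workload divides naturally across compute nodes.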

Customer demand for accessible HPC within E&P and elsewhere is being driven by increased performance in processors per compute node, the low acquisition price per node (one compute node equals four processing cores), and the overall price/performance of compute clusters, which continues to improve. We have our kids, the gamers, to thank for that.

Technology market analysts at the consultancy IDC say the high-performance and technical computing (HPTC) market grew approximately 24% in 2005 to reach a record $9.2 billion in revenue, which is the second consecutive year of 20%-plus growth in this market. The HPC cluster market share continued to show explosive growth, representing over 50% of the HPTC market revenue in the first quarter of 2006.¹ IDC indicated that HPC clusters in the lower-end capacity segments of the market will see substantial customer adoption in coming years.²

EASIER TO WORK WITH

As the benefits of parallel computing on clusters have come to light during the last decade, E&P software vendors have been porting their products over to this environment. As Dan Herold, vice president of seismic software provider Parallel Geoscience Corp., reveals, "Small clusters have been around for a while in E&P, and we have seen that market grow, but they largely have been Linux-based. Now, Microsoft is providing another option to customers that would eliminate the cost of Linux administration – set-up, optimization and maintenance – which should spur interest in HPC further."

Specifically, Herold points out that he has been able to install his company's seismic processing software in a remarkably simple and quick manner, as compared to what is usually a two- to three-day job in a Linux environment. "I've done dozens of Windows installations, in fact, that came up in only ten minutes," he explains, "whereas, I did a Linux installation in Moscow recently that took five days because of all of the customization required in that environment. With Windows, you drop it in place and it runs. Standardization pays off."

Parallel Geoscience will have all of its software applications, including high-end wave migration code that runs on 40 to 256 nodes, running on Windows by December 2006. "Deskside clusters are an excellent opportunity for small- to mid-sized companies who don't want to make the investment in larger systems. They can start small and migrate upwards, as needed," Herold remarks.

Russ Sagert is the Petrel portfolio manager for Schlumberger Information Solutions (SIS), which has an alliance with Microsoft. He emphasizes that there are several aspects at play as companies begin choosing what computing solutions to adopt and how quickly. "When new technology solutions become available, not everyone can afford to change at a moment's notice," he points out. "The move from one type of computing approach to another must be rationalized from a business perspective. So, we will continue to have a wide mix of computing solutions at play. However, it has become very difficult to rationalize maintaining a PC environment for business computing and UNIX/Linux for technical computing. From an enterprise-wide perspective, this is a substandard solution, particularly given today's competitive marketplace."

"There is more business requirement than ever to find one approach that will let us blend our gigabytes of accounting data and petabytes of technology data on one system – business and technical," Sagert continues. "The common environment that most companies are choosing is Windows. Even 18 months ago, no one in the oil and gas industry would have said this would soon be possible, but it is."

The distinction between what has long been thought of as a technical workstation and a personal computer has blurred, since the latter can now be powered by 64-bit processors. From Sagert's perspective, given that the Windows operating system is available for HPC, "there is no reason not to take desktops into parallel computing." But what does this mean for the existing Linux capability provided through centralized computing services at many companies?

Wes Shimanek, Global Petrochemical Industry Manager, Intel Corp., describes the new capabilities as "introducing the opportunity for a new layer in performance." As processor prices continue to drop while performance grows, the only thing preventing increased performance from reaching the desktop PC, the workgroup and the department is the operating system.

Sagert agrees. "Most E&P workgroups don't run three-million-cell jobs. They usually run one- to two-million cells or less for simulation grids or geological modeling, which they will be able to do via Windows Compute Cluster Server virtually overnight. They will have quicker, cleaner access to compute power inside the workgroup. The absurdly large 29,000-node cluster projects will still access central computing or external services for this level of processing requirement."

A few of the extremely large oil and gas companies employ a centralized business model, but most E&P companies use an asset-team business model. This includes companies the size of Chevron and Shell down to the smallest independents. Accessible HPC in a collaborative technical computing environment promises to bring a step-change in performance to these asset-based firms, the ones that are focused on optimizing end-user decision-making.

THE NEW OPPORTUNITY

At the end of the day, E&P companies are looking for two things in order to thrive in today's marketplace:

- Increased productivity from their people that will, in turn, enhance reservoir productivity

- Agility or market responsiveness.

The ability to bring all aspects of an oil and gas company together in a unified computing environment driven from the desktop will result in better output from each discipline (geophysics, geology, engineering, accounting, finance, etc.) and better integration among them. Suddenly, the bulk of the scientific tasks are "just easier to get to, the workflow loop is optimized," adds Sagert.

Coldren's Kenney says "deskside HPC is not eliminating our need for centralized, high-end computing centers; rather, the distribution of tasks is simply shifting more toward the individual or workgroup so that we can turn around a project more quickly and perform better science. It's really a time management issue as technology 'trickles down' to independents. Big companies get priority at the processing shops and presently these shops are very busy. Often there is a need to iterate certain types of seismic processing. For instance, if we reprocess, re-bin and merge 3D input data, certain processing jobs need to be re-run."

"On an average day, between administration of email, data QC, 3-D interpretation, meetings and mission tasks assigned by the boss, it becomes a challenge to squeeze more efficiency out of a workday," Kenney continues. "However, with the emerging software that is being coded for 64-bit processing, geoscientists can leverage these tools to add efficiency and find more oil and gas. So, this is a very appropriate tool to aid the geoscientist."

The same is true for reservoir engineers. As David Gorsuch, SIS' portfolio manager for reservoir engineering, suggests, "the increased availability of low-cost computing power allows engineers to consider modeling the reservoir at the desktop the way we've wanted to and should do to get the geologic detail desired and the proper representation of fluid flow hydraulics. We have been doing the right kind of modeling for oil-in-place for some time, because this is a static look at a reservoir, but to monitor production requires a dynamic model, which means significant computing intensity. With the ever-growing volume of data that all E&P disciplines have available for more in-depth analysis, the computational need continues to expand."

"Now we can begin to explore solutions for reservoir production and look at field variability thanks to the advent of cost-effective, powerful hardware and software solutions that are scalable at the workgroup and desktop levels," Gorsuch continues. "This holds true within such small- and medium-sized operations, as well as for small groups within large companies – reservoir engineers typically are very small groups."

Gorsuch further illustrates the potential for scientific and workflow advancement that having affordable HPC scaled to the workgroup level allows. "It's quite poignant that reservoir engineers have the opportunity to catch up to the geologists," he reflects. "Geologists have for some time been able to run many, many modeling iterations and output static views of reservoir models right on their desktops. Reservoir engineers have never been able to keep up with their volume of output because we are operating with one or two orders of magnitude more computing draw, which has required support from larger computing centers or high-end clusters. The personal HPC systems are making uncertainty analyses, production modeling and other advanced reservoir engineering processes a realistic, practical option for small workgroups and firms."

"While our industry has been moving in this direction in the last decade, the cluster systems we've had to use have been complex and expensive to set up and maintain," adds Gorsuch. "Now, cluster-based operating system and affordable 64-bit processors are providing new opportunity for us all. You can get a homogeneous environment and eight dual-core processing machines for a few tens of thousands of dollars that provide enormous compute capability at the workgroup level, but it is going to take us a while to figure out how to use this new capability."

ABSORBING THE OPPORTUNITY

What new functionality and techniques will be created as the new cluster technology is absorbed? It is one thing to add computational capability; it is another to increase one's capacity to understand and apply the output. Early on, the new HPC cluster capacity will be absorbed by existing E&P techniques, but new ones will soon emerge. What will they be?

One aspect that is sure to change is computing visualization. Certainly, visualization has been seen as a natural link among the E&P disciplines. While engineers and geoscientists tend to measure and describe various physical aspects of the earth in unique ways, common ground has been found by using earth models to integrate and visualize the output of each discipline. "When collaborating around multi-million-cell models, we must be able to absorb the implications of the output," says Gorsuch, "so, clusters or parallel architecture must not only do the sums, but also be a vehicle to understand what you've worked out."

Hardware and software providers are simply creating vehicles to deliver solutions, and the vehicles will increasingly become transparent. The new HPC solution at deskside is no different. The industry will apply parallelization to those software applications that it best fits, and increasingly so, now that hardware and software capability have come together to provide essentially supercomputing functionality at a reasonable cost.
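One rough way to judge which applications parallelization "best fits" is Amdahl's law, a standard result that bounds the speedup by the share of a job that must still run serially. The sketch below is an illustration added here, not something taken from the vendors quoted in this article; the function name and the example fractions are assumptions.

```python
def amdahl_speedup(parallel_fraction, n_processors):
    """Upper bound on speedup when only part of a job can run in parallel.

    parallel_fraction : share of run time that parallelizes (0.0 to 1.0)
    n_processors      : number of processors or cores applied to the job
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if n_processors < 1:
        raise ValueError("n_processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_processors)


# Example: a job that is 95% parallel on a 16-core workgroup cluster.
print(round(amdahl_speedup(0.95, 16), 2))  # prints 9.14
```

Under these assumed numbers, 16 cores deliver a little over a ninefold speedup rather than sixteenfold, and no number of cores can push the same job past 20 times faster. Code with a large serial portion gains little from a cluster, which is one reason vendors port selectively.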

When the compute burden is high, it is natural to ask whether to go parallel and, if so, how fast workgroup and deskside HPC will be absorbed by E&P. Some indication comes from IDC's market research. Looking at E&P software trends, IDC has reported that while the majority of code at year-end 2005 was about five years old, there is already significant movement in scaling this code to thousands and tens of thousands of processors in cluster environments.³

Calling the cluster capability "a disruptive force" in computing solutions, IDC points to strong growth in computing at the workgroup or department level: revenue for systems costing under $50,000 was up 32% in 2005 compared to 2004. In December 2005, IDC predicted that more than half of HPC revenue would be generated by cluster-based solutions within a year, and that clusters would continue to drive growth in the technical server market.⁴

Clearly, each E&P company will make its own determinations. As with all technology breakthroughs, it is the way people actually apply this one on the job that will ultimately uncover new applications and the value they afford.

LITERATURE CITED

1 IDC's Worldwide Technical Server QView Q2, 2006.

2 IDC Special Study, The cluster revolution in technical markets, No. 06C4775, May 2006.

3 IDC White Paper sponsored by Defense Advanced Research Projects Agency, Council on Competitiveness, Study of ISVs serving the high-performance computing market: the need for better application software, No. 05C4552, July 2005.

4 IDC, Worldwide Technical Computing Systems 2006-2010 Forecast, No. 201733, May 2006.


THE AUTHORS


Mark M. Ades is a high-performance computing solutions specialist for Microsoft's Oil & Gas industry. He has more than 23 years' experience in seismic acquisition and processing, including management positions with WesternGeco and Exploration Design Software and various international roles with Western Geophysical. He has also provided independent consulting services to oil and gas companies. Ades holds a bachelor's degree in geology from Centenary College and actively participates in the Society of Exploration Geophysicists.


Marisé Mikulis, energy industry manager for Microsoft, has more than 20 years of diverse oil and gas industry experience. She was previously chief marketing officer for asset management systems supplier Upstreaminfo. Before that, she held management roles with Petroleum Geo-Services, POSC, Digital Equipment Corp. and Superior Oil Company. She graduated cum laude with a bachelor's degree in mathematics and geology from Tufts University and has completed executive coursework at Jones Graduate School of Management at Rice University.
