What’s new in exploration

Also known as cognitive computing, artificial intelligence (AI) is often spoken of as the nirvana of processing masses of detailed information in a faster, better, cheaper way. True. But exploration is not done in a room full of random actors. AI cannot replace deductive reasoning, the true power of an explorationist: being able to jump to a reasonable, low-risk, complex conclusion without all the needed data.

Neuro-logic definitions include everything from Siri, autos and airplanes to Japanese toilets. SPE declares [April 2018] that 94% of execs will use AI for production control and decisions. Neuro is never better than the equations and assumptions in the coded program, or the data input it requires. Neuro computing should refer only to machine learning that is closely supervised, not to a self-aware robot with “rights.” AI might replace what you do, but it can never replace you. Perhaps.

We were seduced in the 1990s into morphing geologists, the 3D conceptualizers, into companies’ workstation “geophysicists.” The value of geophysical geos was lost on management, which eliminated the advantages that math-based geophysicists brought to interpretation. Interpreters were required to work late nights, generating push-button, brightly colored maps. Math geos were sent to run field crews on the front end of exploration or processing special projects at the tail end.

Interpreters lost their understanding of the limits of data and methods. Dry holes born of poor human reasoning almost killed rank exploration by 2001. A V.P. friend got “retired” after his under-five-year Gulf of Mexico (GOM) team drilled six look-alike, $50-million dry holes in a row. No longer do Exxon and others assign new hires to the field, then to a year in processing, then to interpretation. So, are we morphing a new E-child from AI software?

All is not despair. ConocoPhillips uses widespread but people-driven AI. I see other companies swinging back to including math geophysicists, along with geologists, petrophysicists, and reservoir fluids engineers, as a team that trains the AI computing cycles.

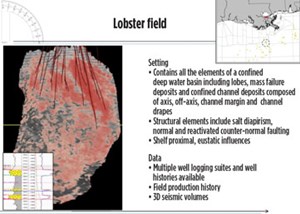

SEG’s June Leading Edge discusses machine learning with petrophysics results. It’s useful, but hardly at the scale of exploration seismic resolution. One UT-RPSEA project pioneered an AI effort, using gigabytes of data in a predictive neurologic solution scheme to derive dynamic engineering data [fluid flow parameters] from 3D seismic. This was an attempt to develop a reservoir production tool for EOR, to find economically attractive clone prospects, and to evaluate M&A possibilities, Fig. 1. Marathon and partners provided licensed 3D data and well control over the GOM Lobster field. Lobster is a classic dipping turbidite reservoir that has complex layers of deposition. Maybe we could have chosen a simpler field to model.

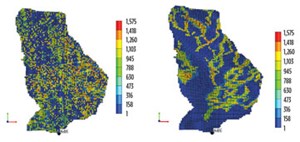

A second illustration looks at AI simulation problems, Fig. 2. Author Sanjay Srinivasan comments, “the model of the Lobster data, on the left, is generated using a traditional two-point statistics-based algorithm (SISIM) and the curvilinearity of channels is not preserved in the model.” Therefore, the common algorithm was improved after multiple iterations, per the model on the right. Both used the same data set as input. Training algorithms matters.

The UT project spent years attempting to train an AI program from multiple formats of 3D, well logs, and pressure/time/production data. Each investigator assumed the input from the others was perfect and that only their own had limitations. The truth? All had limitations. The one problem that we could not overcome was the lack of enough dynamic data to calibrate the geology or reservoir parameters. All machine learning programs rely on someone knowing the correct, or near-correct, answer as input. Do you see a problem with that?
