How to manage software risk

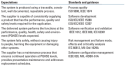

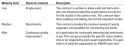

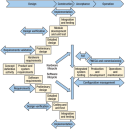

Software changes happen frequently, making this invisible risk an integral part of an asset’s life that must be identified, quantified and mitigated.

Bill O’Grady, Athens Group

Mission-critical processes now rely heavily on automated and highly integrated control systems software. This increased reliance on automation introduces invisible, but very real, software risks during the building, operation and refurbishment of high-specification offshore assets. Lack of standard software engineering practices, inadequate software specification at the contract phase, insufficient tracking of requirements and design, incomplete testing and unmonitored vendors leave offshore assets highly vulnerable to risks associated with software failure.

The process for managing these risks is very different from that used to minimize hardware-related risks. This is both because software is invisible and because it is dependent on other systems; it does nothing until connected to one or more pieces of hardware. Because of these characteristics, software needs to be made “visible” through software-specific contractual language. This sets the stage for software risk assessment and mitigation efforts to begin in the design phase and continue throughout the asset lifecycle. This approach ultimately leads to safer drilling operations and higher-performing, more reliable assets as compared to risk mitigation where software-specific audit and test requirements are not stated and software risk is an afterthought.

VISIBLE FAILURE RESULTS

When software risk is not properly managed, software-related issues can increase project costs significantly. In the worst-case scenario, they can cause serious safety issues.

Loss of life. A control system failure occurred on a large, offshore construction vessel. In an attempt to correct the failure, two control units were restarted twice, unsuccessfully.
A blinking red lamp on the programmable logic controller (PLC) indicated that a memory reset was required, even though a memory reset had never been requested by control system diagnostics during equipment operations. As soon as the hydraulic power packs started, a loud bang was heard. A quadruple joint of pipe had dropped about 1 m to the welding deck below. Then, all clamps in the pipe elevator released and the hydraulic safety stop swung away, resulting in the discharge of a second quadruple joint of pipe. This second pipe joint fell through the full length of the tower, smashing through a crowded access platform to the deck below. The impact threw several personnel overboard, killing four workers and injuring four more. Investigation revealed that the incident occurred because the initialization instruction to open all clamps had been incorrectly pre-loaded in the PLC erasable programmable read-only memory.

Reduced software quality. A series of vendor software process assessments (VSPAs) over the past several months identified hundreds of issues in the software development, testing and quality processes that could impact project quality, schedule or performance. Seventeen percent of these issues were related to project management, 12% were in the area of customer involvement, 1% were related to supplier management and 9% dealt with other activities. The majority of issues (61%) were related to vendors’ inability to provide thorough, requirements-based testing and auditing of the control system. Since the typical vendor contract does not require specific auditing and testing activities (or include an issue-correction mechanism), the vendors were under no obligation to address any of these issues.

Increased project costs. Software-related issues can also increase construction costs. When multiple assets are being built in sequence, failing to identify and mitigate risks early in the lifecycle causes the problem to be replicated on each asset in the fleet.
For example, if discovered late in the newbuild cycle, an anti-collision system omission on a pipe racker can cost up to US$32 million to correct across four assets.

Factors that can be used to quantify the cost of discovering and correcting software-related issues after acceptance include: costs to remain in the yard after contract end/sail date; projected day rate while drilling; spread rate. Other factors are day rate for equipment vendors to fix problems after acceptance; day rate for third-party contractors; lift ship day rate; minimum number of days needed to transport replacement equipment; and minimum number of days needed for commissioning.

PRACTICES TO REDUCE RISK

The following factors are key contributors to software-related safety incidents and project delays: 1) not starting risk assessment and mitigation soon enough in the project lifecycle; 2) incomplete factory acceptance testing (FAT), commissioning and other testing; 3) lack of proper software configuration management (SCM) and alarm management; 4) insufficient auditing processes; and 5) lack of follow-through.

This section includes practices proven to help identify, track and mitigate software-related risks. The methods outlined here are designed to be implemented as part of a lifecycle approach in which the output of one step serves as input for the following steps; this approach can be utilized throughout the entire asset lifecycle. Effective software risk assessment and mitigation plans should include all of the following practices.

Evaluate your contract. As mentioned above, a series of VSPAs revealed that the vendor processes were not sufficient to ensure adequate software testing and auditing. However, because software standards are not typically specified in contracts, the vendors were not required to address the issues that were identified during the assessments.
It is crucial that contracts contain clear language regarding system requirements, audit and test activities, and performance expectations.

Incomplete testing can lead to serious issues being overlooked, potentially resulting in equipment failures or HSE incidents. During FAT, testing was conducted on a series of automatic control sequences for a piece of stand-building equipment. The test was simply to get one length of pipe from the rack and do one make-up. The test scripts did not address most of the designed sequences and did not address error handling at all. The racker was bringing pipe from the fingerboards over to a safe area. While it was doing this, one of the FAT participants pressed the emergency stop, just to make sure that it worked. The pipe racker stopped as expected, but when the test started up again, the racker had forgotten that it was supposed to be going to the safe area. Instead it went straight to the well center, which would have killed anyone standing in the way.

Luckily, this issue was discovered during FAT but, because design sequences and error handling were not sufficiently addressed in the test scripts, it could have easily been overlooked. Contractually specified audit and test procedures would have required the supplier to test for this issue. However, most contracts are hardware-focused, and do not adequately describe the processes for effective auditing and testing of control systems software.

The generally accepted best practice, backed by cross-industry standards, is that control systems software performance quality and HSE levels are validated through a combination of third-party auditing of the process by which the software is developed, tested and integrated; and directed and witnessed testing of the control systems software both prior to acceptance and then in the integrated control application.
Because software on drilling assets is relatively new, the oil and gas industry has not yet incorporated these and other software-related best practices into contractual language. A strong software contract should include language that allows verification of the five critical expectations that drive system performance (Table 1); highlight the fact that systems are developed, deployed and operated on a lifecycle and then describe auditing and testing activities in relation to that lifecycle (Fig. 1); and specify industry standards and guidance that will be used to set and confirm expectations for ongoing performance, quality, health, safety and environmental (PQHSE) levels. Table 2 illustrates the maturity levels at which contracts can be written to achieve this goal.
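
To make the emergency-stop scenario from the FAT example more concrete, the following is a minimal sketch of the kind of requirements-based error-handling test that contractual test procedures could call for. It is not vendor code: the `RackerSequence` class, its states and the `"safe_area"` target name are all hypothetical stand-ins used only to show the shape of such a test.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class State(Enum):
    IDLE = "idle"
    MOVING = "moving"
    STOPPED = "stopped"  # emergency stop active


@dataclass
class RackerSequence:
    """Toy model of an automatic pipe-racker sequence (hypothetical)."""
    state: State = State.IDLE
    target: Optional[str] = None

    def start_move(self, target: str) -> None:
        self.target = target
        self.state = State.MOVING

    def emergency_stop(self) -> None:
        # The commanded target is deliberately kept so it survives the stop.
        self.state = State.STOPPED

    def resume(self) -> str:
        if self.state is not State.STOPPED:
            raise RuntimeError("resume is only valid after an emergency stop")
        if self.target is None:
            raise RuntimeError("no commanded target to resume toward")
        self.state = State.MOVING
        return self.target


def test_resume_keeps_commanded_target() -> None:
    """Error-handling case: stop mid-sequence, then restart."""
    seq = RackerSequence()
    seq.start_move("safe_area")
    seq.emergency_stop()
    assert seq.state is State.STOPPED
    # After restart the racker must still head for the safe area,
    # not default to some other destination such as the well center.
    assert seq.resume() == "safe_area"


test_resume_keeps_commanded_target()
```

The point of the sketch is that the test exercises an interruption and recovery path, not only the normal sequence of fetching one length of pipe and doing one make-up.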

Review requirements and design. It is not enough to make sure that system requirements are called out in the contract. Comprehensive requirements validation and design verification should be performed to help ensure that system requirements have been developed using a sound process. Requirements and design analysis also verifies that the design does in fact implement all of the requirements. Additionally, it provides the basis for tracking those requirements through the rest of the software lifecycle. Properly analyzed requirements and design documents and well-articulated contractual software standards ultimately help improve FAT and commissioning testing by helping to ensure that there are no errors in the requirements and design documents and that vendors are contractually bound to correct any issues that are identified.
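
As a rough illustration of tracking requirements through the lifecycle, the sketch below models a small requirements log and reports which requirements are not yet covered by a test plan in each phase. The field names, the three phase labels and the sample entries are assumptions made for the example, not a prescribed schema.

```python
from dataclasses import dataclass, field

# Assumed test phases against which every requirement should be traced.
TEST_PHASES = ("FAT", "commissioning", "acceptance")


@dataclass
class Requirement:
    req_id: str
    definition: str
    source: str                                      # e.g. contract, user documentation
    covered_in: set = field(default_factory=set)     # phases with a planned test
    open_issues: list = field(default_factory=list)  # related unresolved issues


def coverage_gaps(log):
    """Return {req_id: [phases with no planned test]} for incomplete requirements."""
    gaps = {}
    for req in log:
        missing = [phase for phase in TEST_PHASES if phase not in req.covered_in]
        if missing:
            gaps[req.req_id] = missing
    return gaps


# Illustrative entries only; IDs and wording are invented for the example.
log = [
    Requirement(
        "REQ-001",
        "Racker resumes toward its commanded target after an emergency stop",
        "contract",
        {"FAT", "commissioning", "acceptance"},
    ),
    Requirement(
        "REQ-002",
        "Anti-collision system is active on the pipe racker",
        "functional design specification",
        {"FAT"},
    ),
]

print(coverage_gaps(log))
# → {'REQ-002': ['commissioning', 'acceptance']}
```

A spreadsheet or requirements-management tool would serve the same purpose; what matters is that each requirement carries its source, its test coverage and the status of related issues in one place.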

During requirements validation and design verification, all pertinent project documentation (such as vendor functional and interface design specifications) should be collected and thoroughly reviewed to ensure accuracy. This review should also include end user manuals, maintenance and support manuals specific to the project and vendor software development processes related to the requirements and design phases. To help fill in any missing or unstated requirements, interviews should be conducted with all vendor and company operational experts. Then, each requirement should be reviewed to make sure it has been included in the FAT and in the commissioning and acceptance test plans.

A comprehensive, organized log of control requirements for all equipment in scope should also be developed. This will help to ensure that the output of the requirements and design validation and verification exercise can be easily referenced throughout the asset lifecycle. For each requirement, this tracking system should contain a detailed definition, the original source (e.g., contract, user documentation), test plans/results of FAT, commissioning and acceptance testing, and the status of any related issues.

Evaluate potential failures. Thorough FAT and commissioning testing should be accompanied by an operational failure modes, effects and criticality analysis (FMECA). The tragic safety incident involving four fatalities described above could have been prevented by an FMECA of the equipment covering operational states and message flow. The purpose of an operational FMECA is to help eliminate failures that occur during operations by taking into account all types of failures that could occur throughout the lifespan of each piece of equipment. Performing an FMECA before acceptance helps to ensure that all software-related risks are identified, facilitating the development of comprehensive risk mitigation and failure remediation plans.
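
The article does not prescribe a scoring formula, so the following is only a sketch of how failure modes might be ranked in such an analysis, assuming a simple criticality score of severity multiplied by probability on 1 to 5 scales. The class, the scales and every score in the sample data are illustrative assumptions, not figures from any real assessment.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    equipment: str
    description: str
    severity: int     # assumed scale: 1 (negligible) .. 5 (catastrophic)
    probability: int  # assumed scale: 1 (remote) .. 5 (frequent)
    detection: str    # how the failure would be detected
    owner: str = "unassigned"

    @property
    def criticality(self) -> int:
        # Assumed scoring rule: severity x probability.
        return self.severity * self.probability


def rank(modes):
    """Order failure modes from most to least critical."""
    return sorted(modes, key=lambda m: m.criticality, reverse=True)


# Made-up scores, used only to show the ranking mechanics.
modes = [
    FailureMode("pipe elevator", "clamps open on controller restart", 5, 2,
                "none before motion"),
    FailureMode("pipe racker", "target lost after emergency stop", 5, 3,
                "witnessed FAT"),
    FailureMode("operator station", "alarm shown at wrong workstation", 3, 3,
                "alarm audit"),
]

for m in rank(modes):
    print(m.criticality, m.equipment, "-", m.description)
```

Ranking by a single number is a simplification; in practice the detection method and the assigned owner recorded for each entry matter as much as the score when deciding what to correct first.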
If the FMECA identifies risks that can be reduced or prevented by correcting an issue, again, contractual software standards ensure that vendors are contractually bound to implement the changes.

FMECA steps should include analyzing requirements and design specifications related to control systems and the drilling control network; reviewing the equipment and listing the effect and impact of potential failures; identifying how a failure would be detected; evaluating failure probability; evaluating and prioritizing the severity of the failure and its impact on safety and operations; calculating the criticality of the failure; identifying potential corrective actions; and assigning ownership of recommendations.

Assess software development processes. A VSPA helps to further reduce risk by validating the process by which software is developed. This includes the software itself, as well as any patches or upgrades that are issued. A VSPA should be conducted early in the asset lifecycle so that any software process gaps that may affect the project can be identified and resolved before asset delivery or systems performance is compromised.

As part of the VSPA process, the vendor’s high-level software engineering policies and processes should be extracted via informal presentations from, and interviews with, the engineering manager, software development manager and quality assurance manager. Software development lifecycle processes should be thoroughly examined, and available documentation should be reviewed. The application of the defined processes should be mapped to the current project development. Project artifacts should be reviewed to see how the processes covered above have been (or are being) applied to the project. Control systems requirements and design specifications should be modified based on the findings, and all resulting punch list items should be tracked to ensure that equipment will be delivered drill-ready and on time.

Plan for configuration changes.
The above practices reduce the risk of software-related incidents caused by improper testing, and they set the stage for correction of any future software-related issues. To identify and mitigate ongoing software risks, a software configuration management (SCM) plan should also be implemented. Information logged during requirements and design validation and verification helps to establish a baseline for comparison after software patches are installed, software is upgraded or configurations are changed. This baseline should be included in the SCM plan.

SCM plans should include policies that ensure that vendor technicians follow a check-in process, provide notification before installing any software upgrades, and document all changes in system functionality that are expected as a result of those upgrades. SCM plans should also ensure that software changes are properly reviewed and understood before being approved; require that upgrades occur when the risk of impact on operations is minimized; and employ a standard format for documenting software changes. The policies should also compel vendors to properly test all affected systems once the software modifications have been implemented; require a demonstration by the vendor that the previous version of the software can be re-installed in case an upgrade fails integration tests or causes other problems; and specify that backup copies of the software must be created, stored on the rig and refreshed when software is updated.

Mind your alarms. One of the most important ways to proactively reduce software-related risk is to assess the alarm system, including alarms that correspond with known software-related risks. Many times, critical issues are indicated by alarms that are improperly prioritized, improperly mapped or not mapped at all. As a result, they are not acted upon.
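
A check for unprioritized or unmapped alarms can be sketched as a simple pass over the alarm database. The record fields, the four-level priority scheme, the tags and the workstation name below are assumptions for illustration only; a real alarm database would have its own schema.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed priority scheme; real systems define their own levels.
VALID_PRIORITIES = {"critical", "high", "medium", "low"}


@dataclass
class Alarm:
    tag: str
    text: str
    priority: Optional[str]
    annunciation_point: Optional[str]  # workstation where the alarm is shown


def audit_alarms(alarms):
    """Return (tag, problem) pairs for alarms that could go unnoticed."""
    findings = []
    for alarm in alarms:
        if alarm.priority not in VALID_PRIORITIES:
            findings.append((alarm.tag, "missing or invalid priority"))
        if not alarm.annunciation_point:
            findings.append((alarm.tag, "not mapped to a workstation"))
    return findings


# Invented sample records.
alarms = [
    Alarm("PR-101", "Racker anti-collision zone breached", "critical", "driller_chair"),
    Alarm("HPU-007", "Hydraulic pressure low", None, "driller_chair"),
    Alarm("PLC-020", "Controller memory reset required", "high", None),
]

for tag, problem in audit_alarms(alarms):
    print(f"{tag}: {problem}")
# → HPU-007: missing or invalid priority
# → PLC-020: not mapped to a workstation
```

This only catches structural gaps. Whether a priority is the right one for a given alarm still needs review by people who know the equipment and operations.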
A detailed alarm management process helps ensure that alarms are unambiguously annunciated at the right workstation and under the appropriate circumstances.

Alarm management processes should include: 1) defining the requirements for alarm systems and reviewing the alarm database for accuracy; 2) creating a master alarm document outlining the tag, text, priority, significance and annunciation point for each alarm, any related equipment, detailed action required of the operator, remediation and mitigation plans, and emergency and maintenance contacts; 3) choosing system benchmarking methods and tools; and 4) conducting alarm systems training for all crew members.

Continually review. Software-related risks are ongoing. To help maintain safe, reliable and highly functioning control systems software, software configuration and alarm management audits must be performed whenever there are changes to equipment, software version or configuration, operating requirements or standards, or crew members.

Follow up. Assessment, testing and auditing all result in a list of issues that require resolution. All too often, these lists are shelved due to other priorities. There are many instances where we have seen an accident, fire or equipment failure that would have been prevented if an existing issue had been remediated in a timely manner.
